Opinion.

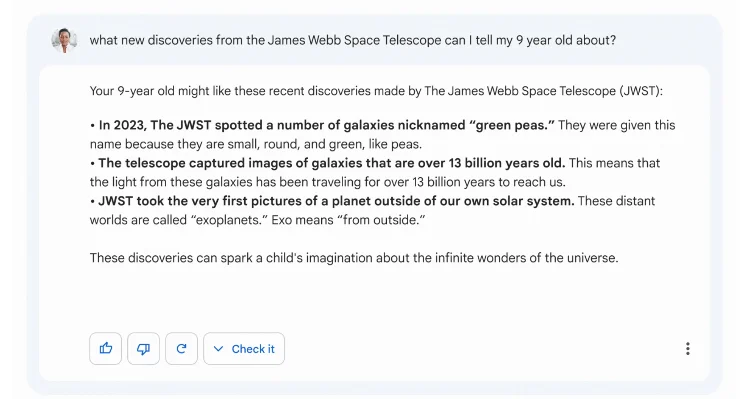

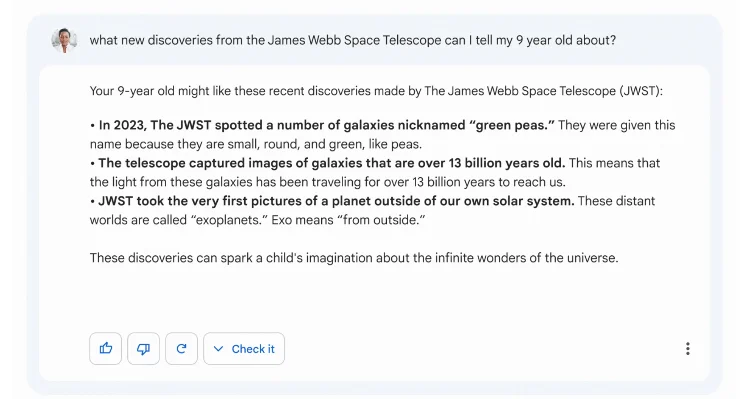

Google’s AI chatbot, Bard, was recently called out by Reuters for incorrectly claiming that the James Webb Space Telescope was the “very first” to take pictures of a planet outside Earth’s solar system.

News of the mistake spread around the same time Google live-streamed a press event to promote Bard alongside other technology updates. Shortly after the event, as word got around, shares in Google’s parent company, Alphabet, fell sharply, wiping about US$100 billion off its market value.

This flaw in AI’s fact-checking ability highlights the problem businesses face when using the technology for content creation. OpenAI, the creator of ChatGPT, publicly acknowledges that its chatbot doesn’t always get its facts right: “ChatGPT sometimes writes plausible-sounding but incorrect or nonsensical answers.”

What does this mean for business leaders?

We know AI chatbots are here to stay, and this is a very exciting moment in our history. It’s understandable that businesses see a clear financial benefit in using AI to cut costs and improve productivity. But as Anthony Caruana and Kathryn Van Kuyk stated earlier this month, relying on ChatGPT to write business copy can be dangerous.

Only yesterday I watched a prominent marketing YouTuber promote a website built entirely with AI-generated content. On the question of fact-checking during editing, his advice was simply to look for numbers and dates in the copy and cross-check them.

It’s a blasé attitude at best, and irresponsible at worst.

For me, the real danger is when people start producing AI content at scale on sensitive topics like mental health or personal finance.

Google’s Search Quality Evaluator Guidelines label these topics ‘Your Money or Your Life’ (YMYL) because they “could significantly impact the health, financial stability, or safety of people, or the welfare or well-being of society”.

An example of AI chatbot content gone wrong

Jon Christian, managing editor of Futurism, uncovered serious factual errors in an AI-produced article published by Men’s Journal. The publisher was forced to go back and edit the article once Christian’s team alerted it to the inaccuracies. You can see an archived version of the article here.

YMYL topics can be highly lucrative. Publishers build websites around them and fill the content with advertising and affiliate links.

Google is clearly worried about this. As Barry Schwartz of Search Engine Land reported, the company has restated its advice on creating content with AI tools.

It goes without saying that the internet is full of misinformation, both unintentional and malicious, and that it’s our job to make sure we get our information from reliable sources.

The problem arises when search engines start surfacing content on sensitive topics that contains factual errors. People accept that content as the truth and act on its advice.

Bard does not currently cite its sources, something that’s causing a stir online. Here’s Glenn Gabe, a US-based SEO consultant at G-Squared:

In its current experimental form, I don’t see any attribution or citations. No links, no clicks. That’s an act of war against publishers IMO. Let’s see how that goes… NeevAI’s implementation is far better at this point. At least they cite sources with links to those sites. https://t.co/gXrEoCp9ld

— Glenn Gabe (@glenngabe) February 6, 2023

Bard’s lack of citations or attribution also seems at odds with Google’s own quality guidelines, which state that “mild inaccuracies or content from less reliable sources could significantly impact someone’s health”.

When I taught high school English, I saw firsthand how little students knew about cross-checking facts and evaluating sources. With the emergence of AI chatbots, I can imagine educators scrambling to update their programs to include more explicit teaching on fact-checking and source evaluation.

While AI chatbots will make our lives easier in ways we can’t yet imagine, the current technology is very much experimental. If you’re using AI to create content for your business, you need to ensure you have strict quality control measures in place around fact-checking.